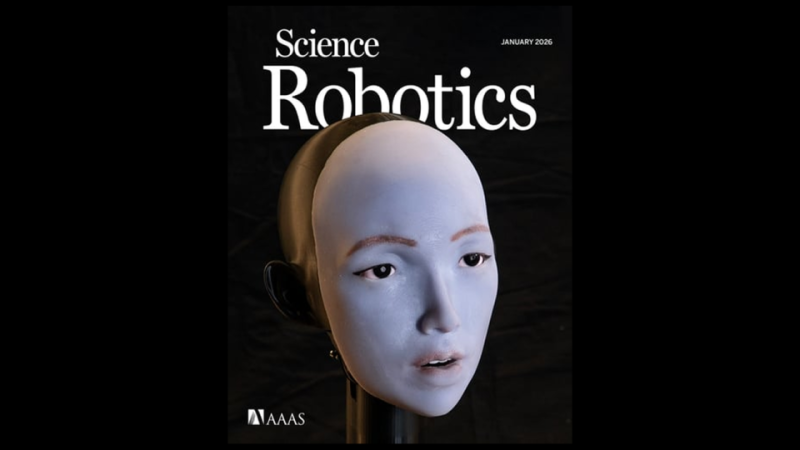

In a new step bringing robots closer to the human world, researchers have announced a robot that can learn and master one of the most complex human facial movements: the motion of the lips during speech and singing.

This advancement could represent a turning point in the future of humanoid robots, especially since nearly half of human attention during direct interaction is focused on facial expressions and lip movement, making us highly sensitive to any flaw or lack of harmony in these movements.

Until recently, robots faced significant difficulty in mimicking the natural way humans move their lips, often appearing uncoordinated or “strange.”

This phenomenon is known as the “uncanny valley”: the unease a person feels on seeing something that appears almost human but does not move or behave in a completely natural way. However, this may soon change.

How did the robot learn to move its lips?

On Wednesday, engineers unveiled a new robot that, for the first time, managed to learn and reproduce human lip movements during speech and even singing.

The robot first learned to use the 26 motors in its face by observing its own reflection in a mirror, then learned to imitate human lip movement by watching hours of video on YouTube.

In a study, the researchers demonstrated how the robot became capable of pronouncing words in multiple languages, and even singing a full song from its first album titled “hello world,” which was created using artificial intelligence.

The engineers confirm that the robot’s performance will improve over time, stating, “The more it interacts with humans, the better its performance becomes.”

Despite this notable progress, the research team acknowledges that the lip movement is not yet perfect. The robot had difficulty with hard sounds such as “B” and with sounds that require puckering the lips, such as “W,” but the team believes these issues will improve with more training.

Much current humanoid robot research focuses on leg and hand movement for walking or grasping objects, but expressing emotion through the face is no less important, especially in applications that require direct interaction with humans.

Integrating lip-syncing capability with interactive AI systems could add an entirely new dimension to the human-robot relationship, making interaction more natural and human.

Researchers expect these robots with “living faces” to find wide applications in fields such as:

- Entertainment.

- Education.

- Medicine.

- Elderly care.

Some economists estimate that over a billion humanoid robots could be manufactured in the next decade.

One comment states: “There is no future where humanoid robots are without faces. And if their eyes and lips do not move correctly, they will remain strange forever.”

This project is part of a research effort spanning more than ten years that aims to have robots learn to communicate with humans rather than be programmed with rigid rules.

The statement concludes: “There is something enchanting that happens when a robot learns to smile or speak simply by watching and listening to humans. Even as a seasoned roboticist, I can’t help but smile when the robot smiles at me spontaneously.”